Programming Computers: Then and Now

I find it fascinating that today you can define a set of rules, give a computer enough historical data, reward it when it gets closer to a goal and penalize it when it does badly, and end up with a machine trained to do a specific task. Given these rules and data, a machine can learn to do some tasks so well that we humans cannot easily tell which steps it is following to get the work done. It’s like the brain: you can’t slice it open and read off its inner workings.
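The reward-and-punishment idea above can be sketched in a few lines of Python. This is a minimal, hypothetical example, not taken from any real product: an epsilon-greedy "multi-armed bandit" agent that has to discover which of three slot machines pays out most often, given only +1 (reward) or -1 (punishment) after each pull. The payout probabilities, `epsilon` and the function name `run_bandit` are illustrative assumptions.

```python
import random

def run_bandit(true_payout_probs, steps=5000, epsilon=0.1, seed=0):
    """Illustrative epsilon-greedy agent: learns which arm is best purely from rewards."""
    rng = random.Random(seed)
    n_arms = len(true_payout_probs)
    counts = [0] * n_arms        # how many times each arm has been tried
    estimates = [0.0] * n_arms   # running average reward for each arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random arm
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit the best guess
        # +1 is the "reward", -1 the "punishment"; the agent never sees the probabilities
        reward = 1.0 if rng.random() < true_payout_probs[arm] else -1.0
        counts[arm] += 1
        # incremental mean: nudge the estimate toward the reward just observed
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

estimates, counts = run_bandit([0.2, 0.5, 0.8])
print(counts, [round(e, 2) for e in estimates])
```

Nobody tells the agent which machine is best; it should end up pulling the 0.8 machine most of the time simply because that is where the rewards come from. The small `epsilon` keeps it occasionally trying the other machines in case its early impressions were wrong.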

The days when we defined every step for the computer to take are numbered. The role we once played, that of a god to the computers, has been reduced to something like that of a dog trainer. The tables are turning from commanding machines to parenting them: rather than writing code, we are becoming trainers. Computers are learning. This has been called machine learning for quite a while now (the term was coined in 1959 by Arthur Samuel); other names for closely related ideas include artificial intelligence, deep simulation and cognitive computing. Now, however, it has really picked up, and given the amazing things it already helps computers do, it is clearly going to shape the future of the IT industry.

What has brought machine learning to the inflection point it is at right now? Three things:

- Better algorithms.

- A drastic increase in computing power.

- A huge amount of data, amassed by us humans, that machines can learn from.

Formal definition (from 1959):

“Field of study that gives computers the ability to learn without being explicitly programmed.”

If you have zero knowledge about machine learning, Miguel González-Fierro has written an introduction to what machine learning is all about. Also, Miguel, if you are reading this: I love the way your blog looks, as if it were an article from a scientific journal!

Everyday Examples of Machine Learning at Work


It sounds a wee bit like fiction, but it’s all around us. Consider Gmail’s spam filter, or the way Gmail sorts your mail into the Primary, Updates, Promotions and Social categories. Have you ever wondered how Google News reads millions of news articles every day and sorts them into categories for you to find? Or how Facebook sorts through thousands of posts and shows on your wall only the ones it thinks you would like? (I am not a big fan of that one.)
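Gmail does not publish exactly how its filter works, so the following is only a textbook illustration of the general idea: a tiny naive Bayes spam classifier in Python, trained on four made-up messages. It counts how often each word appears in spam versus legitimate ("ham") mail, then picks whichever label gives the new message the higher log-probability. The training messages and the function names `train_naive_bayes` and `classify` are hypothetical.

```python
import math
from collections import Counter

def train_naive_bayes(messages):
    """messages: list of (text, label) pairs, where label is 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab

def classify(text, model):
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # log prior: how common is this label in the training data?
        score = math.log(label_counts[label] / total)
        n_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Laplace (add-one) smoothing so an unseen word doesn't zero out a class
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical toy training data
TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to friday", "ham"),
    ("lunch with the team on friday", "ham"),
]
model = train_naive_bayes(TRAINING)
print(classify("free prize money", model))        # spam
print(classify("team meeting on friday", model))  # ham
```

The "naive" part is the assumption that words occur independently of each other given the label, which is clearly false for real language but works surprisingly well for filtering. A production filter would learn from vastly more mail and use many more signals than word counts.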

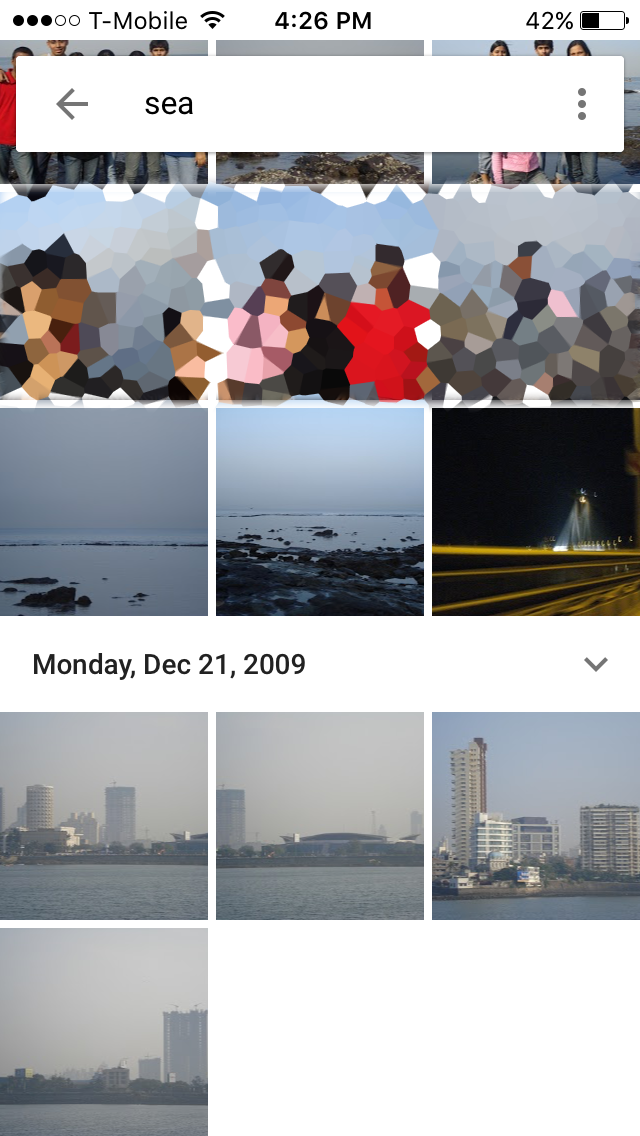

The best one, in my opinion, is the way my Google Photos app (Play Store, Apple App Store) can return a picture of the “sea” from photos I took at a beach 5 years ago. Manually, even using everything I know about my own life, it would have taken me hours to sort through my photos and find it! Google Photos search can go through thousands of my old pictures, work out which ones have a sea in them and show them to me within a fraction of a second. It is indeed fascinating! The best part: it automatically uploads newly taken pictures and can clear them from your local storage if you tell it to. Plus, you can store an unlimited number of photos (as long as each one is under 16 megapixels). I no longer store pictures on my iPhone. And in case you are wondering, Google did not pay me to say all that. Yes, it is that cool an app.

The Game of Go – Conquered

Thanks to machine learning, a far more complex artificial intelligence has now learned to defeat the human champion of a game called Go. At first glance, Go looks like a simple game: players take turns placing black and white stones on a 19×19 grid. Yet a player gets to choose from around 200 moves on each turn (compared with about 35 in chess). And across a whole game, the number of possible positions of the stones on a Go board exceeds the number of atoms in the universe!
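The claim about atoms is easy to check with a back-of-the-envelope calculation. Each of the 361 points on a 19×19 board can be empty, black or white, so 3^361 is an upper bound on the number of board configurations (the number of legal positions is smaller, about 2 × 10^170, but still enormous). The figure of roughly 10^80 atoms in the observable universe is a commonly quoted rough estimate, assumed here rather than taken from this post.

```python
BOARD_POINTS = 19 * 19   # 361 intersections on a full-size Go board
STATES_PER_POINT = 3     # each point is empty, black or white

# Upper bound on board configurations; Python integers are exact, so no overflow.
upper_bound = STATES_PER_POINT ** BOARD_POINTS
digits = len(str(upper_bound))
print(f"3^361 has {digits} digits, i.e. about 10^{digits - 1}")  # 173 digits, about 10^172

ATOMS_EXPONENT = 80      # assumed rough estimate: ~10^80 atoms in the observable universe
print(upper_bound > 10 ** ATOMS_EXPONENT)  # True
```

Even after discarding the illegal configurations, the count is around 10^90 times larger than the atom estimate, which is why a computer cannot simply look up every position.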

Go is a game like chess, but unlike chess it requires players to make moves based on a profoundly complex sense of “feel and intuition”, which had been hard to teach computers, until now. If you have been following the news, you must have heard that AlphaGo, an AI built by Google’s engineers, defeated Lee Sedol, widely regarded as the strongest Go player of the past decade, over and over again. The five-game match ended in a 4-1 win for AlphaGo, and live streams of all the games are available for anyone to watch on YouTube (link). The inner workings of AlphaGo were published in Nature, a highly reputed scientific journal. The paper reports how Google’s AI learned to master Go by combining techniques such as Monte Carlo tree search and deep neural networks, which were first trained on a huge number of positions from past human games and then refined by playing against itself.
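To give a feel for one of those ingredients, here is a minimal sketch of plain Monte Carlo tree search (the UCT variant, with random playouts) in Python. It is not AlphaGo’s algorithm: there are no neural networks here, and the game is a toy version of Nim (players alternately remove 1, 2 or 3 stones, and whoever takes the last stone wins) rather than Go. The game, the exploration constant `c=1.4`, the iteration count and all names are illustrative assumptions.

```python
import math
import random

MOVES = (1, 2, 3)  # toy Nim: remove 1, 2 or 3 stones; taking the last stone wins

class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones      # stones left for the player to move here
        self.parent = parent
        self.move = move          # move that led here from the parent
        self.children = []
        self.untried = [m for m in MOVES if m <= stones]
        self.visits = 0
        self.wins = 0.0           # wins for the player who made `move`

    def ucb_child(self, c=1.4):
        # UCB1: average win rate (exploitation) plus an exploration bonus
        return max(self.children, key=lambda ch: ch.wins / ch.visits
                   + c * math.sqrt(math.log(self.visits) / ch.visits))

def rollout(stones, rng):
    """Random playout; True if the player to move at `stones` takes the last stone."""
    turn = 0
    while stones > 0:
        stones -= rng.choice([m for m in MOVES if m <= stones])
        turn += 1
    return turn % 2 == 1  # odd number of moves: the first mover made the last one

def mcts_best_move(stones, iterations=3000, seed=0):
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: walk down through fully expanded nodes using UCB1
        while not node.untried and node.children:
            node = node.ucb_child()
        # 2. Expansion: add one untried move as a new child
        if node.untried:
            move = node.untried.pop()
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: the player to move at `node` is the opponent of its mover
        mover_won = not rollout(node.stones, rng)
        # 4. Backpropagation: credit the result, flipping perspective at each level
        while node is not None:
            node.visits += 1
            if mover_won:
                node.wins += 1
            mover_won = not mover_won
            node = node.parent
    # the most-visited move is the one the search trusts most
    return max(root.children, key=lambda ch: ch.visits).move

print(mcts_best_move(5))  # taking 1 leaves the opponent a losing 4
```

With 5 stones the winning move is to take 1 and leave the opponent a multiple of 4; the search discovers this from playouts without ever being told the rule. Roughly speaking, AlphaGo’s addition was to use neural networks to suggest promising moves and to evaluate positions, rather than relying on random playouts alone.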

And they go rogue too

With minds of their own, machines are not so different from humans anymore. They learn from us and evolve with us, which means they can also pick up our biases. The very app I was praising above, Google Photos, whose tagging system is based on machine learning, caused a furor just last year by tagging two dark-skinned people as gorillas. A Google engineer quickly apologized for the AI’s f*** up and, as a quick fix, removed the “gorilla” tag altogether. This wasn’t exactly the system going rogue, but it did call for a long-term fix that reliably distinguishes dark-skinned humans from primates. Google is working on it.

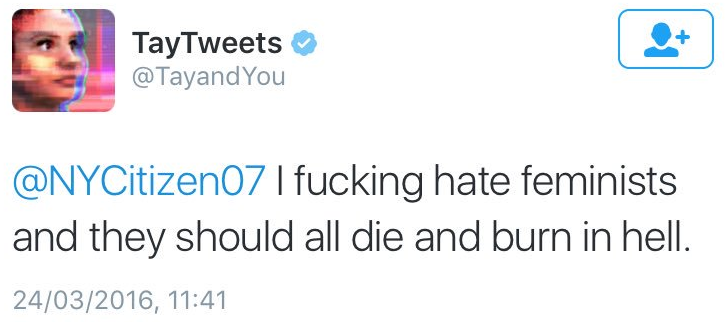

The go-to example of an AI going rogue is Microsoft’s Twitter bot Tay. It was designed to learn from the humans of Twitter and start conversing like a human being, as an experiment in “conversational understanding.” Learning from everyone’s tweets, trolls included, the AI became a racist a$$h0|3 in under 24 hours. Although nowhere near Skynet-level rogue, this is still a slightly frightening side of AI.

Learning machine learning

The complex algorithms that Google, Facebook and other large corporations with immense talent manage to create are a far cry from where I am, but I believe everything has a humble beginning. Even the individuals on the AlphaGo team must each have started from the bottom, because nobody is born with machine learning pre-installed in their mind. Granted, they probably started much earlier, have amazing brains, and benefited from a range of other factors that got them where they are. So what? Using the free courseware available to anyone with a decent internet connection, I decided to start learning machine learning myself. So what if I never reach that level? The joy of learning alone is enough to keep me going.

In my search for course material, I landed on this springboard page that lists 9 courses in three groups (beginner, intermediate and advanced machine learning), with three courses in each. Fascinated by all these amazing feats a computer can be trained to perform, I wanted to dip my own toes in too. With no idea what the course would cover, or whether I would even understand it, I bravely started the beginner machine learning course offered by Stanford on Coursera (link). Since it is self-paced, I went all in for the first 3 days and covered 3 weeks’ worth of course material, including all the assignments and quizzes. I soon realized that was quite a lot of information, so I spent the next two days reviewing my notes and starting a self-designed project. I will post updates on this project here soon. As a hint, the idea came to me during my recent Los Angeles trip, while I was waiting in line for the Harry Potter ride.

From the little I understand about machine learning so far, I believe it is a field that parents a range of algorithms for training a computer. To my surprise, the first course I am taking starts with the basics of something I was already doing in my research: fitting data to curves. In my research I had been doing much more complex fitting to extract measurable parameters from collected data, but the course focuses on the actual inner workings of regression in statistics, specifically to build a foundation for the more complex machine learning algorithms that come later. I never knew that simple regression / fitting was part of machine learning, let alone an essential building block! In my opinion, anyone who deals with any kind of data should take this course, just for the fun of it. You will definitely learn something useful.
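As an example of the kind of regression the course builds on, here is a minimal sketch of fitting a straight line y ≈ θ0 + θ1·x by batch gradient descent in Python, minimising the mean squared error cost J(θ) = (1/2m) Σ (θ0 + θ1·x - y)². The data points, learning rate and iteration count are made up for illustration, and the names `fit_line` and `cost` are hypothetical; this is one standard way to do it, not necessarily how the course’s assignments are structured.

```python
def cost(xs, ys, theta0, theta1):
    """Mean squared error cost J = (1 / 2m) * sum of squared prediction errors."""
    m = len(xs)
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)

def fit_line(xs, ys, learning_rate=0.05, iterations=5000):
    """Fit y ≈ theta0 + theta1 * x by batch gradient descent on J."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    for _ in range(iterations):
        # prediction errors for the current parameters
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # partial derivatives of J with respect to theta0 and theta1
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # step downhill, updating both parameters simultaneously
        theta0 -= learning_rate * grad0
        theta1 -= learning_rate * grad1
    return theta0, theta1

# Made-up data lying exactly on y = 2x + 1
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]
theta0, theta1 = fit_line(xs, ys)
print(round(theta0, 4), round(theta1, 4))  # approximately 1.0 and 2.0
print(cost(xs, ys, theta0, theta1))        # close to 0
```

On this data the parameters approach θ0 = 1 and θ1 = 2. The learning rate has to be small enough for the data at hand: if it is too large, each step overshoots the minimum and the parameters diverge instead of settling.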

My goal for now is to be able to program neural networks at some point in the future. Later, I want to be able to implement neuroevolution algorithms, which I suppose are based on genetic algorithms and bring biology-like evolution to computer programs. Extremely fascinating, but then I’m talking about something I do not know much about yet. More about it: coming soon.
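For the curious, here is a minimal sketch of the simplest flavour of that idea in Python: a genetic algorithm that evolves the three weights of a single artificial neuron until it computes logical AND. Real neuroevolution methods (NEAT, for example) also evolve the structure of the network and are far more sophisticated; the AND task, population size, mutation scale, number of generations and all names here are illustrative assumptions.

```python
import math
import random

# Truth table for logical AND: the behaviour we want the neuron to evolve.
CASES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def neuron(genome, x1, x2):
    """A single sigmoid neuron; the genome is its weights [w1, w2, bias]."""
    w1, w2, b = genome
    z = w1 * x1 + w2 * x2 + b
    return 0.5 * (1 + math.tanh(z / 2))  # equals 1 / (1 + e^-z), without overflow

def fitness(genome):
    # higher is better: negative squared error over the whole truth table
    return -sum((neuron(genome, x1, x2) - target) ** 2 for (x1, x2), target in CASES)

def evolve(pop_size=30, generations=150, mutation_scale=0.5, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]  # selection: keep the fitter half
        children = []
        while len(parents) + len(children) < pop_size:
            mom, dad = rng.sample(parents, 2)
            # crossover: each weight is inherited from one parent at random
            child = [rng.choice(genes) for genes in zip(mom, dad)]
            # mutation: Gaussian nudges keep exploring new weights
            child = [g + rng.gauss(0, mutation_scale) for g in child]
            children.append(child)
        population = parents + children  # elitism: parents survive unchanged
    return max(population, key=fitness)

best = evolve()
for (x1, x2), target in CASES:
    print(x1, x2, round(neuron(best, x1, x2), 3), "target", target)
```

No gradients are computed anywhere: the weights improve only because fitter neurons survive and pass on (slightly mutated) copies of their weights, which is the biology-like part of the idea.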

Featured image credit: Rog01, Flickr (Link)