Our eyes don’t work like a camera, with pixels and frame rates. The eye makes rapid, tiny movements and continuously updates the image to “paint” in the detail. And since we have two eyes, the brain combines both signals to increase the resolution further. As a result, the brain can build an image of much higher resolution than the eye alone could capture. The very fact that we haven’t been able to build artificial devices that work the way the human eye does suggests that we still don’t fully understand this complex organ.

What we do know is that the average human eye’s ability to distinguish between two points has been measured at around 20 arcseconds. That means two points need to subtend an angle of at least roughly 0.005 degrees (20/3600 ≈ 0.0056°) for the eye to tell them apart. Any two points closer than that are seen as a single point.

Note that if a detail subtends 0.005 degrees when it lies 1 foot away, it subtends a smaller angle as it moves farther away. This is why you have to bring tiny text closer to read it: bringing it closer increases the angle it subtends, and only then can the eye resolve individual letters. In other words, anything looks sharp enough if it is far enough away.
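To make the “smaller angle with distance” point concrete, here is a minimal Python sketch. A detail of size s at distance d subtends an angle of 2·atan(s / 2d). The helper names `subtended_angle_arcsec` and `size_at_threshold` are my own, hypothetical, and the 20 arcsec threshold is simply taken from the paragraph above. The sketch sizes a detail so that it is just resolvable at 1 foot, then shows its angle halving each time the distance doubles.

```python
import math

ARCSEC_PER_DEGREE = 3600
ACUITY_ARCSEC = 20  # resolving power quoted in this post (assumption taken from the text)

def subtended_angle_arcsec(size, distance):
    """Angle in arcseconds subtended by a detail of `size` at `distance` (same units)."""
    return math.degrees(2 * math.atan(size / (2 * distance))) * ARCSEC_PER_DEGREE

def size_at_threshold(distance, acuity_arcsec=ACUITY_ARCSEC):
    """Size of a detail that subtends exactly `acuity_arcsec` at `distance`."""
    half_angle = math.radians(acuity_arcsec / ARCSEC_PER_DEGREE) / 2
    return 2 * distance * math.tan(half_angle)

print(f"{ACUITY_ARCSEC} arcsec = {ACUITY_ARCSEC / ARCSEC_PER_DEGREE:.4f} degrees")

# A detail that is just resolvable at 1 foot (12 inches)...
detail_in = size_at_threshold(12)
print(f"detail size: {detail_in * 25.4 * 1000:.1f} micrometres")

# ...subtends half the angle every time the distance doubles.
for distance_in in (12, 24, 48):
    angle = subtended_angle_arcsec(detail_in, distance_in)
    print(f"{distance_in:>2} in -> {angle:.1f} arcsec")
```

At 24 inches the same detail falls to about 10 arcsec, half the threshold, which is why it blurs into its neighbours.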

Apple Science

Apple’s flagship Retina display is said to be so sharp that the human eye cannot distinguish individual pixels at a typical viewing distance. As Steve Jobs put it:

It turns out there’s a magic number right around 300 pixels per inch, that when you hold something around to 10 to 12 inches away from your eyes, is the limit of the human retina to differentiate the pixels. Given a large enough viewing distance, all displays eventually become retina.
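It is worth checking what acuity Jobs’s “magic number” actually implies. The sketch below assumes that “retina” means one pixel pitch (1/PPI inch) subtends no more than the eye’s limit. The helper `pixel_angle_arcsec` is hypothetical, my own name for the calculation. At 300 PPI, one pixel subtends roughly 69 arcsec at 10 inches and 57 arcsec at 12 inches, which is about 1 arcminute, noticeably coarser than the 20 arcsec figure used earlier.

```python
import math

def pixel_angle_arcsec(ppi, distance_in):
    """Angle in arcseconds subtended by one pixel pitch (1/ppi inch) at distance_in inches."""
    pitch_in = 1 / ppi
    return math.degrees(2 * math.atan(pitch_in / (2 * distance_in))) * 3600

# Jobs's numbers: 300 PPI viewed from 10 to 12 inches.
for distance_in in (10, 12):
    angle = pixel_angle_arcsec(300, distance_in)
    print(f"300 PPI at {distance_in} in: {angle:.0f} arcsec ({angle / 60:.2f} arcmin)")
```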

Basically, Apple did some science at home and came up with a nice round number: 300 PPI. Practically, you don’t need anything higher than that. Technically, you do.

Isn’t “more” better?

No one is really sure. By my calculations, an iPhone 5s display (about 3.5 × 2 in) subtends 13.3° × 7.6° when viewed from 15 inches. Given the resolving power our eyes have, you’d need a screen that can show about 4 megapixels in that small area. In layman’s terms, the screen would need to pack around 710 PPI; practically, that sounds a bit extreme (or maybe my calculations are wrong, so please point it out in the comments). I’d go with Steve Jobs’s calculation.
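Here is a minimal sketch of the iPhone 5s calculation, so you can check or tweak it yourself. It takes the 3.5 × 2 inch screen, the 15 inch viewing distance and the 20 arcsec acuity from the paragraph above as assumptions. The helpers `subtended_deg` and `required_ppi` are hypothetical names of my own. With exactly 20 arcsec it lands at roughly 690 PPI and 3.3 megapixels. Plugging in the rounder 0.005 degrees instead gives about 760 PPI and 4.1 megapixels, so the figures quoted above fall within that range.

```python
import math

ACUITY_DEG = 20 / 3600           # 20 arcsec, the figure used in this post
DISTANCE_IN = 15                 # viewing distance from the text
WIDTH_IN, HEIGHT_IN = 3.5, 2.0   # approximate iPhone 5s screen size from the text

def subtended_deg(size_in, distance_in):
    """Full angle in degrees subtended by size_in at distance_in."""
    return math.degrees(2 * math.atan(size_in / (2 * distance_in)))

def required_ppi(distance_in, acuity_deg):
    """Pixels per inch needed so that one pixel pitch subtends exactly acuity_deg."""
    pitch_in = 2 * distance_in * math.tan(math.radians(acuity_deg) / 2)
    return 1 / pitch_in

w_deg = subtended_deg(WIDTH_IN, DISTANCE_IN)
h_deg = subtended_deg(HEIGHT_IN, DISTANCE_IN)
ppi = required_ppi(DISTANCE_IN, ACUITY_DEG)
megapixels = (WIDTH_IN * ppi) * (HEIGHT_IN * ppi) / 1e6

print(f"field of view: {w_deg:.1f} x {h_deg:.1f} degrees")
print(f"required density: {ppi:.0f} PPI, about {megapixels:.1f} megapixels")
```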

My shitty screen is a retina display

So, technically, any device can be said to sport the most touted screen in the industry today, a retina display, if it is viewed from a sufficient distance. For instance, my laptop’s screen, with a pixel density of about 110 PPI (roughly a quarter of what we see on today’s devices), becomes a retina display when I use it from about 80 cm, which is also the distance I normally sit from it. Even doctors recommend 50–70 cm from screen to eye as the optimum distance to avoid eye strain.

On my shitty screen, the pixels are 0.23 mm apart, center to center. At 80 cm, my eye practically cannot tell the difference between a retina display and a shitty one. So I ask: do you really need ever higher PPI devices? But that is just my opinion.

My shitty phone is a retina display

Since phones are generally used from much closer, they need a higher PPI for the screen to look crisp. My phone, a Lumia 520, has a 233 PPI screen. It becomes a retina display at any distance beyond about 15 inches. I have to hold my phone about 4 inches farther away than an iPhone to make its display look as good as an iPhone’s. Do I ever bring my phone closer than that? No. Do I need a higher PPI? No.
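The laptop and phone distances above can be reproduced with one small function. This is a sketch under one assumption: a screen counts as “retina” once a single pixel pitch subtends less than the chosen acuity. The helper `retina_distance_in` is a hypothetical name of my own. The 326 PPI value for the iPhone 5s is Apple’s published spec rather than a number from this post. Notably, the 80 cm and 15 inch figures match an acuity of about 1 arcminute, Jobs’s criterion, rather than the stricter 20 arcsec used earlier.

```python
import math

MM_PER_INCH = 25.4

def retina_distance_in(ppi, acuity_arcmin=1.0):
    """Distance in inches beyond which one pixel pitch subtends less than acuity_arcmin."""
    pitch_in = 1 / ppi
    half_angle = math.radians(acuity_arcmin / 60) / 2
    return pitch_in / (2 * math.tan(half_angle))

screens = {
    "laptop (110 PPI)": 110,
    "Lumia 520 (233 PPI)": 233,
    "iPhone 5s (326 PPI)": 326,
}
for name, ppi in screens.items():
    d_in = retina_distance_in(ppi)
    print(f"{name}: pitch {MM_PER_INCH / ppi:.2f} mm, retina beyond "
          f"{d_in:.1f} in ({d_in * MM_PER_INCH / 10:.0f} cm)")
```

Passing `acuity_arcmin=20/60` instead triples every distance, so under the 20 arcsec figure the laptop would only become retina beyond roughly 2.4 m.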

Conclusion

Recent phones from Samsung, Nokia and HTC pack in 316, 332 and 440 PPI respectively, and some go even higher. Companies are spending billions to shrink the distance between their pixels. Sony, for instance, recently came up with a 440 PPI display. And now we have 4K TVs. Practically, I’d say, end this manufacturer pissing contest and spend the money on something more worthwhile. Technically, going by the calculations above, we have yet to develop far more sophisticated technologies to cram in enough pixels to satisfy the human eye.

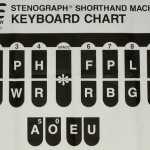

They use a different keyboard with just 22 keys. There’s no full-size QWERTY keyboard; it looks something like this.
